K8s CRD size limits

Size concerns when deploying a large software project on k8s

While working on a large k8s deployment project for OpenStack, we've hit a few limits with our k8s operators. I recently decided to take a closer look at CRD size limits, how to calculate CRD size properly, and what to watch out for in both the short and long term.

How we use Custom Resource Definitions

A Custom Resource Definition (CRD) is a way to extend the k8s API, and we use CRDs heavily to deploy OpenStack. One of our deployment goals has been to streamline deployment around ControlPlane and DataPlane concepts. This split works well because the ControlPlane's OpenStack services are deployed natively on k8s via operators, while the DataPlane services are deployed on RHEL via Ansible. The DataPlane has a few different CRDs that drive Ansible through an operator-native workflow. The ControlPlane CRD encapsulates the configuration, scaling, orchestration, and updating of over 20 "service operators". Our ControlPlane CRD has grown quite large, so it is the one this article focuses on.

The metadata.annotations limit

The first limit we hit with the ControlPlane was:

metadata.annotations: Too long: must have at most 262144 bytes

This was caused by the k8s size limit on annotations (262144 bytes, i.e. 256 KiB, across all of an object's annotations) combined with the fact that our operator tooling uses kubectl's default mode, client-side apply. When client-side apply creates or updates a CRD, kubectl stores a full copy of the applied manifest in the kubectl.kubernetes.io/last-applied-configuration annotation so it can compare against it on future updates. This isn't strictly a CRD size limit, though, and can be avoided by simply passing --server-side to 'kubectl apply'. It also isn't triggered if you deploy your CRDs via OLM (Operator Lifecycle Manager), which is our primary deployment use case. Our CRD size continued to grow...
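To see how close a manifest would get to that limit under client-side apply, here is a minimal Python sketch. It assumes the last-applied annotation holds the manifest serialized as compact JSON (kubectl's exact serialization may differ by a few bytes) and that the limit counts the length of every annotation key plus its value. The function names are hypothetical and not part of any kubectl or Kubernetes API.

```python
import json

# Combined size limit for all annotations on an object (keys + values), in bytes.
TOTAL_ANNOTATION_LIMIT_BYTES = 262144  # 256 KiB
LAST_APPLIED_KEY = "kubectl.kubernetes.io/last-applied-configuration"


def estimated_annotation_bytes(manifest: dict) -> int:
    """Roughly estimate annotation size after a client-side `kubectl apply`.

    `manifest` is the object as written on disk (without a last-applied
    annotation). Assumption: kubectl stores it as compact JSON.
    """
    metadata = manifest.get("metadata") or {}
    annotations = dict(metadata.get("annotations") or {})
    annotations[LAST_APPLIED_KEY] = json.dumps(manifest, separators=(",", ":"))
    return sum(len(k.encode("utf-8")) + len(v.encode("utf-8"))
               for k, v in annotations.items())


def fits_client_side_apply(manifest: dict) -> bool:
    """True if the estimated annotations stay within the API server limit."""
    return estimated_annotation_bytes(manifest) <= TOTAL_ANNOTATION_LIMIT_BYTES


if __name__ == "__main__":
    small = {"apiVersion": "apiextensions.k8s.io/v1",
             "kind": "CustomResourceDefinition",
             "metadata": {"name": "demo.example.com"}}
    # Hypothetical CRD padded to ~300 KB, well past the annotation limit.
    large = {**small, "spec": {"padding": "x" * 300_000}}
    print(fits_client_side_apply(small))  # True
    print(fits_client_side_apply(large))  # False
```

With --server-side, kubectl does not write this annotation, which is why the flag sidesteps the problem.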

The etcd limit

Search online for 'what is the CRD size limit' and you'll likely get an answer of around 1MB. Keep digging and you'll find that the actual limit depends on how your etcd cluster is configured. The upstream default maximum request size for etcd is currently 1.5 MiB (https://etcd.io/docs/v3.5/dev-guide/limit/). The Red Hat OpenShift k8s distribution uses the same limit and does not support changing it. This is the hard limit on CRD size. The k8s API server also appears to check a similar limit, but it is the underlying etcd storage limit that is the real driver.

So this is the limit, but how close are we to actually hitting it?

Checking CRD size: YAML vs JSON

Our stock YAML version of the ControlPlane CRD is around 900KB (with descriptions disabled), but that isn't how etcd stores it:

```shell
$ etcdctl get "/kubernetes.io/apiextensions.k8s.io/customresourcedefinitions/openstackcontrolplanes.core.openstack.org" --print-value-only=true | wc -c
293507
```

This is because etcd stores it as JSON without any whitespace. So a better quick check of CRD size on the command line is to convert the YAML to compact JSON and count the bytes:

```shell
$ yq eval -o=json core.openstack.org_openstackcontrolplanes.yaml | jq -c . | wc -c
291405
```
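The same measurement can be sketched in Python, assuming the CRD has already been converted to JSON (for example with the yq command above). The helper names are hypothetical; the point is that indentation, not content, accounts for most of the gap between the pretty-printed and stored sizes, and it grows with nesting depth.

```python
import json


def compact_json_size(obj) -> int:
    """Bytes used by `obj` as whitespace-free JSON.

    Roughly what `jq -c . | wc -c` reports, minus the trailing newline jq adds.
    """
    text = json.dumps(obj, separators=(",", ":"), ensure_ascii=False)
    return len(text.encode("utf-8"))


def indented_json_size(obj, indent: int = 2) -> int:
    """Bytes used by `obj` as indented, human-readable JSON."""
    text = json.dumps(obj, indent=indent, ensure_ascii=False)
    return len(text.encode("utf-8"))


if __name__ == "__main__":
    # Hypothetical deeply nested schema fragment: indentation grows with depth,
    # so the pretty-printed form is much larger than the compact one.
    fragment = {"type": "object"}
    for level in range(6):
        fragment = {"type": "object", "properties": {f"level{level}": fragment}}
    print("indented bytes:", indented_json_size(fragment))
    print("compact bytes: ", compact_json_size(fragment))
```

To measure a real CRD, load the yq output with `json.load` and pass it to `compact_json_size`; the result should be close to the `jq -c` figure, though number formatting can differ slightly between tools.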

Serialization through the API does appear to change the JSON field ordering and size slightly compared with what we get on the command line (293507 vs 291405 bytes here), but the difference isn't significant. It's probably good to keep a large buffer when working near size limits anyway.

This is good news: we should have plenty of room before we hit the 1.5 MiB limit, roughly enough to store 5 copies of our current CRD schema. That headroom matters because a CRD carries a full schema for every version listed in its spec.versions, so we'll need room for both the old and new schemas when we eventually upgrade to a new API version.
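As a quick worked check of that estimate, assuming etcd's default limit of exactly 1.5 MiB and ignoring the etcd key and any request overhead, the two sizes measured above give:

```latex
\[
\frac{1.5 \times 1024^{2}\ \text{bytes}}{291405\ \text{bytes}}
  = \frac{1572864}{291405} \approx 5.40,
\qquad
\frac{1572864}{293507} \approx 5.36
\]
```

Either measurement leaves room for roughly five copies of the current schema.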

Our plan is to implement a check that keeps the CRD below a size threshold. This should ensure we always have enough space for at least 2 versions and can therefore always upgrade from one version to the next.
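A check like that could be as simple as the following Python sketch. The function names and the 10% safety margin are hypothetical choices, and it assumes each version's schema is roughly the size of the current single-version CRD, measured as compact JSON.

```python
# Hypothetical CI guard for CRD size; names and defaults are illustrative.
ETCD_MAX_REQUEST_BYTES = 1572864  # etcd default: 1.5 MiB


def size_budget(limit: int = ETCD_MAX_REQUEST_BYTES,
                versions: int = 2,
                safety_margin: float = 0.10) -> int:
    """Largest per-version size (bytes) that leaves room for `versions`
    versions of similar size, after reserving `safety_margin` of the limit."""
    if versions < 1 or not 0.0 <= safety_margin < 1.0:
        raise ValueError("need versions >= 1 and 0 <= safety_margin < 1")
    usable = int(limit * (1.0 - safety_margin))
    return usable // versions


def check_crd_size(compact_json_bytes: int, **budget_kwargs) -> bool:
    """True if a CRD version of this compact JSON size fits the budget."""
    return compact_json_bytes <= size_budget(**budget_kwargs)


if __name__ == "__main__":
    budget = size_budget()
    for measured in (291405, 293507):  # sizes measured earlier in this post
        status = "OK" if check_crd_size(measured) else "TOO LARGE"
        print(f"{measured} bytes vs budget {budget}: {status}")
```

Dividing the usable space by the number of versions is deliberately conservative: it fails the build long before etcd would reject the object.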

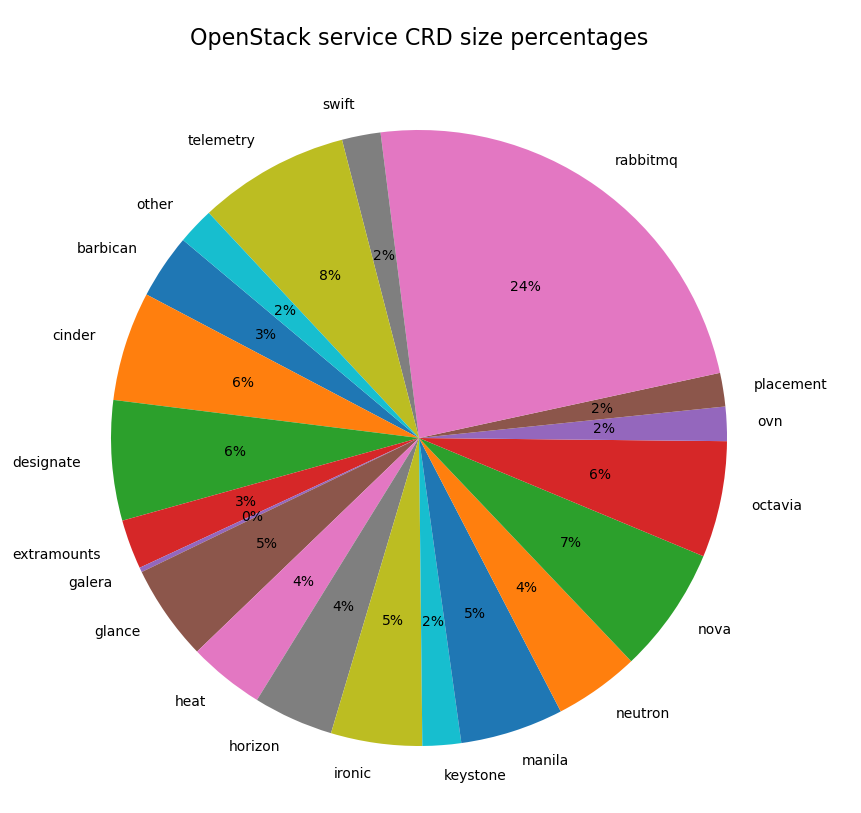

Where does all the space go

The graph below gives a rough idea which CRDs are consuming the most space. As you can clearly see the RabbitMQ struct makes up the biggest chunk in our ControlPlane CRD. This is because the rabbitmq-operator includes a nested podTemplateSpec definition which ends up being quite large. We unfortunately didn't catch how large this struct was until after GA so there are plans to streamline, or possibly get rid of the struct in our ControlPlane CRD when we create a new version. This alone would save a good chunk of space in our CRD.

Note: when generating this graph, I rolled some of the smallest entries, such as OpenStackclient, Memcached, TLS configuration, DNS, and Redis, into the 'other' category. I also included the top-level extraMounts, since its size is still significant and fits into the analysis below.
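One way to produce a breakdown like this is to measure the compact JSON size of each top-level property under spec in the CRD's openAPIV3Schema. The Python sketch below does that; it is not necessarily how our graph was generated, it assumes each service section is a top-level spec property, and the function names and demo CRD are hypothetical.

```python
import json
from typing import List, Optional, Tuple


def compact_size(obj) -> int:
    """Bytes used by `obj` as whitespace-free JSON."""
    return len(json.dumps(obj, separators=(",", ":"), ensure_ascii=False).encode("utf-8"))


def spec_breakdown(crd: dict, version: Optional[str] = None) -> List[Tuple[str, int]]:
    """Compact JSON size of each top-level `spec` property in a CRD schema.

    Uses the first entry in spec.versions unless `version` names another one.
    Results are sorted largest first.
    """
    versions = crd["spec"]["versions"]
    entry = next((v for v in versions if version is None or v["name"] == version), None)
    if entry is None:
        raise KeyError(f"version {version!r} not found in CRD")
    schema = entry["schema"]["openAPIV3Schema"]
    spec_props = schema["properties"]["spec"].get("properties", {})
    sizes = [(name, compact_size(sub)) for name, sub in spec_props.items()]
    return sorted(sizes, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Tiny hypothetical CRD; a real one would come from json.load on yq's output.
    demo = {"spec": {"versions": [{"name": "v1beta1", "schema": {"openAPIV3Schema": {
        "type": "object",
        "properties": {"spec": {"type": "object", "properties": {
            "rabbitmq": {"type": "object", "properties": {
                "podTemplate": {"type": "object", "x-kubernetes-preserve-unknown-fields": True}}},
            "memcached": {"type": "object"},
        }}},
    }}}]}}
    for name, size in spec_breakdown(demo):
        print(f"{name:<12} {size:>6} bytes")
```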

Things you can do to minimize CRD size

If you have also gotten into large-CRD territory, here are some ideas that might help:

- Disable descriptions when generating larger CRDs. Our docs and reference architecture cover the field descriptions anyway, so we lose little and the savings are significant.

- Avoid large nested structs like podTemplateSpec where possible.

- Where large nested structs are needed, use streamlined versions of them. Our project doesn't need all the fields for extraMounts, for example. In places where podTemplateSpec is used, we might also benefit from a more streamlined version of this nested struct.
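The first idea is usually handled at generation time; for example, if your CRDs are generated with controller-gen, its `crd:maxDescLen=0` option drops field descriptions. If you need to post-process an already generated schema instead, a minimal Python sketch might look like this. The names are hypothetical, and it assumes only `properties` and `patternProperties` map property names to sub-schemas, so a field that is itself named `description` survives.

```python
import copy

# Schema keywords whose values map *property names* to sub-schemas.
# A property that happens to be called "description" must be kept.
_NAME_MAPS = ("properties", "patternProperties")
# Keywords holding literal data rather than sub-schemas; copied untouched.
_LITERALS = ("default", "example", "enum")


def strip_descriptions(schema):
    """Return a copy of an OpenAPI v3 schema with every `description` removed."""
    if isinstance(schema, list):
        return [strip_descriptions(item) for item in schema]
    if not isinstance(schema, dict):
        return schema
    out = {}
    for key, value in schema.items():
        if key == "description":
            continue  # drop the documentation string itself
        if key in _NAME_MAPS and isinstance(value, dict):
            # Keep property names, strip descriptions inside each sub-schema.
            out[key] = {name: strip_descriptions(sub) for name, sub in value.items()}
        elif key in _LITERALS:
            out[key] = copy.deepcopy(value)
        else:
            out[key] = strip_descriptions(value)
    return out
```

The original schema is left untouched, so you can compare sizes before and after.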

Summary

The vision for our initial ControlPlane was a composition of OpenStack resources. This was largely driven by a desire to simplify the deployment workflow and to centrally control orchestration of OpenStack services during minor and major updates.

The good news is that, for the time being, we have space left to grow, add new features, and perform upgrades, and we have some options to shrink things further. But the ControlPlane is one of the largest CRDs I've seen, and going forward we need to keep a close eye on its size. That comes with the territory, though: OpenStack is, after all, a large project.